Machine learning usually demands a lot of computing power, and large-scale workloads often need cloud services. This blog covers how to run a TensorFlow model on a microcontroller, which can save a lot of cost. It emphasises that ML doesn't always need high computing power if we learn to use Tiny ML effectively.

It all started with a problem. I wanted to control the environmental conditions of a plant using machine learning, but my microcontroller, an ESP32, has just 512 kB of space, while my model alone was 1.23 MB. So the question was: how could I use that model on my ESP32 board?

After some research, I learned about edge computing. Edge computing means processing and storing data at the edge of a network, close to where the data is generated, rather than in a centralized location such as a data centre. This makes data processing faster and more efficient, and it also reduces latency and bandwidth usage.

For example, self-driving cars rely on edge computing to process sensor data in real time, which lets them make quick decisions and navigate safely.

Here are a few advantages of edge computing:

Reduced Latency: Edge computing minimizes the distance data must travel, reducing latency and enabling real-time decision-making.

Improved Performance: Edge computing processes data close to where it is generated instead of sending everything to a central server. This lets the system handle the large volumes of data produced by IoT devices and other sources more efficiently.

Increased Security: Edge computing can improve security by reducing the amount of sensitive data that needs to be transmitted over the network.

Cost Savings: Edge computing has the potential to reduce costs and increase efficiency by reducing the amount of data that needs to be transmitted and stored in the cloud.

Offline Operation: Edge computing enables devices to continue to operate even when disconnected from the network, providing continuity of operations in remote or offline locations.

Increased Reliability: Edge computing can provide a more reliable solution by reducing the risk of data loss due to network outages or other disruptions.

Scalability: Edge computing can be scaled easily as the number of devices and data sources grows, without requiring significant changes to the central infrastructure.

Increased Flexibility: Edge computing allows for the deployment of machine learning models and other advanced technologies at the edge, enabling more flexible and innovative solutions.

Digging further into the problem, I came across another concept that could help: Tiny ML. Tiny ML is a subset of machine learning (ML) that focuses on running ML algorithms on small, low-power devices such as microcontrollers, sensors, and embedded systems.

So, these were the options I considered:

Deploy the model to the cloud: this requires an internet connection, which might not always be available to the ESP32.

Use the model directly: again, the 1.23 MB model does not fit in the ESP32's 512 kB.

TFLite

TensorFlow Lite (TFLite) is a lightweight version of TensorFlow, the open-source machine learning framework developed by Google. It is designed to run on resource-constrained devices such as mobile phones, embedded systems, and microcontrollers. For microcontrollers specifically, there is a further-trimmed runtime called TensorFlow Lite for Microcontrollers (TFLM).

Refer to this Colab notebook: https://colab.research.google.com/drive/1P3KrhuXFPjKb8Ub9lLcnSek2KZH1xQ4k?usp=sharing

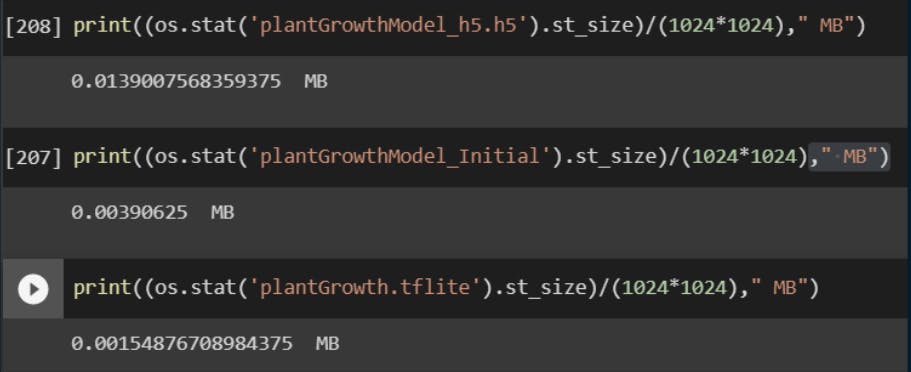

This Colab notebook shows how to reduce the size of the model.

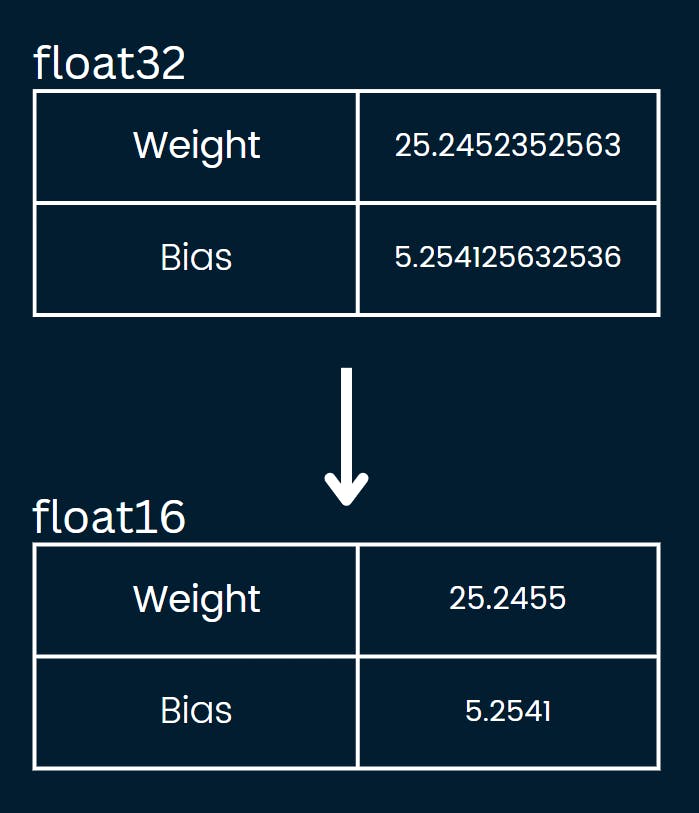

The notebook does this with quantization.

Quantization is the process of converting a continuous, high-precision representation of a signal or data into a lower-precision, discrete form. The goal of quantization is to reduce the memory and computational requirements of a machine-learning model, without significantly affecting its accuracy. This is achieved by reducing the number of bits used to represent the data, thus reducing the number of possible values that can be represented. Quantization can be applied to weights, activations, and gradients in a neural network, and is a common technique used in hardware acceleration and deployment of deep learning models on embedded devices with limited resources.
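The following is a minimal C++ sketch of affine (asymmetric) int8 quantization. TFLite's int8 scheme uses this form: real_value = scale × (int8_value − zero_point). The sketch picks a single scale and zero point for a whole tensor from its min/max range. The TFLite converter additionally uses symmetric, per-channel parameters (zero point 0) for weights, which this sketch does not show. The function names are illustrative only and are not TFLite APIs.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Per-tensor parameters for affine int8 quantization:
// real_value ~= scale * (q - zero_point)
struct QuantParams {
  float scale;
  int32_t zero_point;
};

// Saturates a value to the int8 range [-128, 127].
int32_t ClampToInt8(int32_t v) {
  return std::max<int32_t>(-128, std::min<int32_t>(127, v));
}

// Picks scale and zero point so that [min_val, max_val] maps onto [-128, 127].
QuantParams ChooseParams(float min_val, float max_val) {
  // Widen the range to include 0 so that 0.0f is exactly representable.
  min_val = std::min(min_val, 0.0f);
  max_val = std::max(max_val, 0.0f);
  assert(max_val > min_val);
  const float scale = (max_val - min_val) / 255.0f;  // 256 levels, 255 steps
  const int32_t zero_point =
      static_cast<int32_t>(std::lround(-128.0f - min_val / scale));
  return {scale, ClampToInt8(zero_point)};
}

// float -> int8: divide by the step size, shift by the zero point, saturate.
int8_t Quantize(float x, QuantParams p) {
  const int32_t q = static_cast<int32_t>(std::lround(x / p.scale)) + p.zero_point;
  return static_cast<int8_t>(ClampToInt8(q));
}

// int8 -> float: undo the shift and the scaling.
float Dequantize(int8_t q, QuantParams p) {
  return p.scale * static_cast<float>(static_cast<int32_t>(q) - p.zero_point);
}
```

Any value inside the range comes back within about half a step (scale / 2) of its original value, and 0.0 is represented exactly. This is why the accuracy of a quantized model usually changes only slightly.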

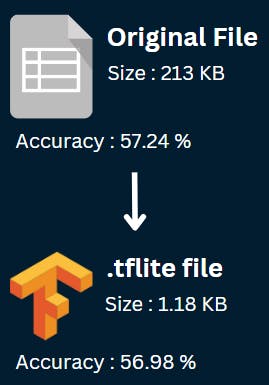

Model Accuracy

The accuracy of the model changed slightly after quantization.

The goal here is to show how to compress the model, so I won't focus much on accuracy.
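Since size is the focus, a back-of-the-envelope estimate shows why quantization can solve the 512 kB problem. The estimate rests on several assumptions:

- The 1.23 MB is dominated by float32 weights (4 bytes each).
- 1 MB is taken as 10^6 bytes and 512 kB as 512 × 1024 bytes.
- FlatBuffer overhead and the RAM the interpreter needs at run time are ignored.

Both function names are hypothetical.

```cpp
#include <cassert>

// Estimated size after storing each float32 weight with `bits` bits,
// assuming the model size is dominated by float32 weights.
double EstimateQuantizedSizeBytes(double float32_size_bytes, int bits) {
  assert(float32_size_bytes >= 0.0 && bits > 0 && bits <= 32);
  return float32_size_bytes * bits / 32.0;  // a float32 weight uses 32 bits
}

// True if the estimated model size fits within the given byte budget.
bool FitsInBudget(double size_bytes, double budget_bytes) {
  return size_bytes <= budget_bytes;
}
```

Under these assumptions, int8 weights come to about 307,500 bytes, which fits in 524,288 bytes. float16 weights (about 615,000 bytes) would not fit.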

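Now that we have a model, how can we use it in Arduino code? `model.tflite` is the file generated by the Python code. A microcontroller usually has no file system, so the model is compiled into the sketch as a C byte array, for example with `xxd -i model.tflite > model_data.h`. It is then loaded with TensorFlow Lite for Microcontrollers.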

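```cpp
#include <TensorFlowLite.h>  // header name depends on the Arduino port installed
#include "tensorflow/lite/micro/micro_interpreter.h"
#include "tensorflow/lite/micro/micro_mutable_op_resolver.h"
#include "tensorflow/lite/schema/schema_generated.h"

// Generated with `xxd -i model.tflite > model_data.h`; defines the array `model_tflite`.
// Editing its declaration to `alignas(16) const unsigned char` keeps it aligned and in flash.
#include "model_data.h"

// Scratch memory for the interpreter's tensors. The size is a guess:
// increase it if AllocateTensors() fails.
constexpr int kTensorArenaSize = 10 * 1024;
alignas(16) static uint8_t tensor_arena[kTensorArenaSize];

void setup() {
  Serial.begin(115200);

  // Load the model directly from the byte array (no file system needed)
  const tflite::Model* model = tflite::GetModel(model_tflite);
  if (model->version() != TFLITE_SCHEMA_VERSION) {
    Serial.println("Model schema version not supported");
    while (true) {}
  }

  // Register only the ops the model uses. FullyConnected + ReLU is an
  // assumption for a small dense network; adjust it to match your model.
  static tflite::MicroMutableOpResolver<2> resolver;
  resolver.AddFullyConnected();
  resolver.AddRelu();

  // Recent TFLM signature; older Arduino ports also expect an ErrorReporter*.
  static tflite::MicroInterpreter interpreter(model, resolver, tensor_arena, kTensorArenaSize);
  if (interpreter.AllocateTensors() != kTfLiteOk) {
    Serial.println("AllocateTensors() failed");
    while (true) {}
  }

  // Get pointers to the input and output tensors
  TfLiteTensor* input = interpreter.input(0);
  TfLiteTensor* output = interpreter.output(0);
  if (input->type != kTfLiteFloat32 || output->type != kTfLiteFloat32) {
    Serial.println("Expected float32 input/output (int8 models need scaling)");
    while (true) {}
  }

  // Fill the input tensor with the three input features
  const float input_data[3] = {45.0f, 40.0f, 8.0f};
  memcpy(input->data.f, input_data, sizeof(input_data));

  // Run the model
  if (interpreter.Invoke() != kTfLiteOk) {
    Serial.println("Invoke() failed");
    while (true) {}
  }

  // Print every value in the output tensor
  const int num_outputs = output->bytes / sizeof(float);
  for (int i = 0; i < num_outputs; i++) {
    int prediction = (int)round(output->data.f[i]);
    Serial.print("Output ");
    Serial.print(i);
    Serial.print(": ");
    Serial.println(prediction);
  }
}

void loop() {}
```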
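On a microcontroller there is usually no file system, so `model.tflite` is normally compiled into the sketch as a byte array. The TensorFlow Lite for Microcontrollers docs use `xxd -i model.tflite > model_data.h` for this, which produces an `unsigned char model_tflite[]` array and a `model_tflite_len` length. The hypothetical C++ helper below shows what that conversion produces. It adds `alignas(16) const` to the declaration and first runs a sanity check for the FlatBuffer file identifier `TFL3`, which a `.tflite` file carries at byte offset 4.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// True if the buffer carries the TFLite FlatBuffer file identifier "TFL3",
// which FlatBuffers stores at bytes 4..7 (after the 4-byte root offset).
bool LooksLikeTflite(const std::vector<uint8_t>& bytes) {
  return bytes.size() >= 8 && bytes[4] == 'T' && bytes[5] == 'F' &&
         bytes[6] == 'L' && bytes[7] == '3';
}

// Emits a C/C++ header embedding the model bytes, similar in spirit to `xxd -i`.
std::string ToCHeader(const std::vector<uint8_t>& bytes, const std::string& name) {
  assert(!name.empty());
  std::string out = "alignas(16) const unsigned char " + name + "[] = {";
  char hex[8];
  for (std::size_t i = 0; i < bytes.size(); ++i) {
    if (i % 12 == 0) out += "\n ";  // start a new line every 12 bytes
    std::snprintf(hex, sizeof(hex), " 0x%02x,", static_cast<unsigned>(bytes[i]));
    out += hex;
  }
  out += "\n};\nconst unsigned int " + name + "_len = " +
         std::to_string(bytes.size()) + ";\n";
  return out;
}
```

In practice `xxd -i` does the same job. The point of the sketch is that the model ends up as plain constant data in the firmware image, with no loading step at run time.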

To capture an image, you can use the following code. It assumes an Adafruit VC0706 serial camera connected to the ESP32. The VC0706 returns a compressed JPEG, so the captured bytes must be decoded into pixels before they can be arranged into a matrix and used as input for your model.

```cpp
#include <Adafruit_VC0706.h>

// VC0706 serial camera on the ESP32's second hardware UART (wiring is board-specific)
Adafruit_VC0706 cam = Adafruit_VC0706(&Serial1);

void setup() {
  Serial.begin(115200);

  if (!cam.begin()) {
    Serial.println("Couldn't find camera");
    while (1);
  }

  // Use the smallest resolution to keep memory use low
  cam.setImageSize(VC0706_160x120);

  // Take a photo
  if (!cam.takePicture()) {
    Serial.println("Failed to take picture");
    while (1);
  }

  // The camera returns a compressed JPEG; read it in chunks of at most 32 bytes
  uint32_t jpglen = cam.frameLength();
  uint8_t *jpeg = (uint8_t *)malloc(jpglen);
  if (jpeg == nullptr) {
    Serial.println("Not enough memory for the image");
    while (1);
  }
  uint32_t offset = 0;
  while (offset < jpglen) {
    uint8_t chunk = (jpglen - offset < 32) ? (uint8_t)(jpglen - offset) : 32;
    uint8_t *buffer = cam.readPicture(chunk);
    if (buffer == nullptr) {
      Serial.println("Failed to read picture");
      while (1);
    }
    memcpy(jpeg + offset, buffer, chunk);
    offset += chunk;
  }

  // The bytes in `jpeg` are compressed data, not pixels. Decode them with a
  // JPEG decoder library before arranging the pixels into a matrix for the model.
  // ...

  free(jpeg);
  cam.resumeVideo();
}

void loop() {
  // nothing to do here
}
```
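Suppose a JPEG decoder has already produced an 8-bit grayscale buffer in row-major order. The decoder is not shown, and no particular decoding library or API is assumed here. That buffer still has to be resized and normalized to the model's input shape. The hypothetical `ToModelInput` below does this with floor-based nearest-neighbour sampling and maps pixel values from [0, 255] to [0.0, 1.0].

Both the grayscale format and the [0, 1] scaling are assumptions, so use whatever preprocessing the model was trained with. Keeping frames small matters on an ESP32: a 160 × 120 grayscale frame is 19,200 bytes, versus 307,200 bytes at 640 × 480. For the same reason the result goes into a heap-allocated vector.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts an 8-bit grayscale image (row-major, width * height bytes) into a
// row-major float matrix of out_rows x out_cols for the model input.
std::vector<float> ToModelInput(const std::vector<uint8_t>& gray, int width, int height,
                                int out_rows, int out_cols) {
  assert(width > 0 && height > 0 && out_rows > 0 && out_cols > 0);
  assert(gray.size() == static_cast<std::size_t>(width) * height);
  std::vector<float> input(static_cast<std::size_t>(out_rows) * out_cols);
  for (int r = 0; r < out_rows; ++r) {
    const int src_r = r * height / out_rows;  // nearest source row (floor)
    for (int c = 0; c < out_cols; ++c) {
      const int src_c = c * width / out_cols;  // nearest source column (floor)
      // Scale the pixel from [0, 255] to [0.0, 1.0]
      input[static_cast<std::size_t>(r) * out_cols + c] =
          gray[static_cast<std::size_t>(src_r) * width + src_c] / 255.0f;
    }
  }
  return input;
}
```

The returned vector can then be copied into a float32 input tensor with `memcpy`, provided its size matches the tensor's element count.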

Challenges

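Finding working code: little of the code available works out of the box, so I had to go through a lot of trial and error.

Model compatibility with the TFLite library version in Arduino: TensorFlow Lite for Microcontrollers is still evolving, and its API and supported operations change between versions. Matching the converted model to the Arduino library version is therefore a major problem.

Handling tensors in C++ is complex: C++ works much closer to the hardware than Python and has no NumPy-style array handling, so you manage raw buffers, shapes, and data types yourself.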
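In C++, a tensor's data is exposed as a flat pointer (for example `input->data.f`), and its shape is stored separately in `input->dims` (`dims->size` and `dims->data`). TFLite stores tensors in row-major order, so for an image tensor of shape [1, H, W, C] the channel index varies fastest. The hypothetical helpers below compute the flat offset of a multi-dimensional index, which is the bookkeeping that NumPy normally hides.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major (C-order) strides: the last dimension varies fastest.
std::vector<std::size_t> RowMajorStrides(const std::vector<std::size_t>& shape) {
  std::vector<std::size_t> strides(shape.size(), 1);
  for (std::size_t d = shape.size(); d-- > 1;) {
    strides[d - 1] = strides[d] * shape[d];
  }
  return strides;
}

// Flat element offset of a multi-dimensional index, e.g. [n, h, w, c] for NHWC.
std::size_t FlatOffset(const std::vector<std::size_t>& shape,
                       const std::vector<std::size_t>& index) {
  assert(shape.size() == index.size());
  const std::vector<std::size_t> strides = RowMajorStrides(shape);
  std::size_t offset = 0;
  for (std::size_t d = 0; d < shape.size(); ++d) {
    assert(index[d] < shape[d]);  // index must be inside the tensor
    offset += index[d] * strides[d];
  }
  return offset;
}
```

For example, with shape {1, 2, 3, 4} the strides are {24, 12, 4, 1}, so index {0, 1, 2, 3} maps to 12 + 8 + 3 = 23, the last of the 24 elements.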

In conclusion, TFLite and edge computing are changing the way we approach machine learning by making it possible to run trained models on low-power devices. This opens up new possibilities for intelligent applications on a wide range of devices, from smartphones and wearables to industrial equipment and IoT devices. It will be exciting to see how developers use these tools to build the next generation of smart, connected devices. Whether you're a seasoned machine learning practitioner or just starting out, TFLite and edge computing are well worth exploring.

For any queries, feel free to write in the comments or reach out to me on social media. You can find more at https://www.lakshaykumar.tech/